Learning Data-Driven Traffic Simulation

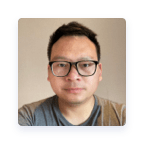

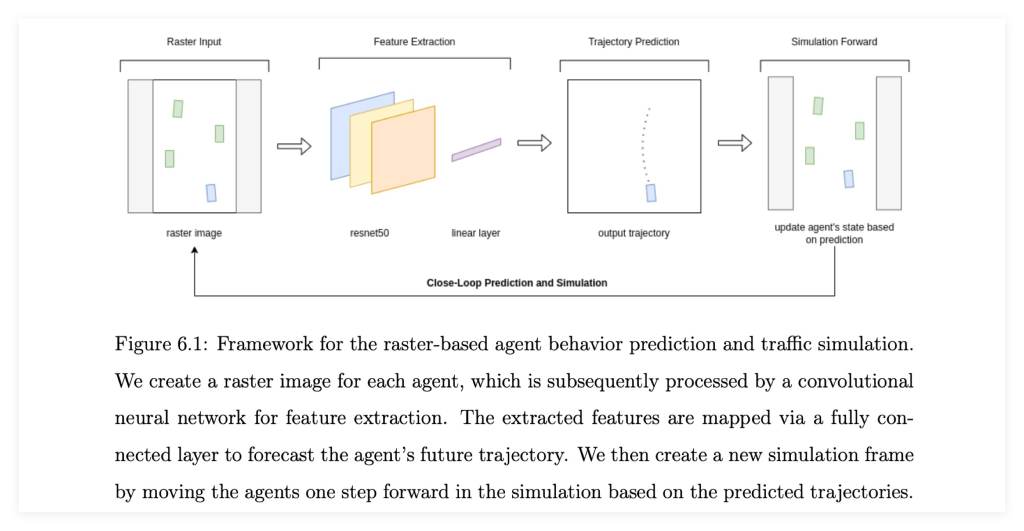

This project learns a data-driven traffic simulation agent model for evaluating autonomous driving planners. To capture traffic interactions, map topology, and dynamic traffic information such as traffic lights, we represent each driving situation as a multi-channel raster image centered on the focus agent. A deep convolutional neural network extracts features from the raster image and outputs the future trajectory of the focus agent. The model is trained with supervised learning to minimize the difference between predicted and ground-truth trajectories and to avoid conflicting predictions within a scenario. To alleviate distribution shift during closed-loop testing, we also add kinematically feasible perturbed trajectories to the training data, which helps the model learn to recover from undesired driving situations (e.g., driving off-road).

We train and evaluate the model on 510,000 20-second scenarios from the nuPlan dataset provided by Motional. We verify model performance with both open-loop prediction evaluation and closed-loop simulation evaluation. In open-loop evaluation, the model achieves an average displacement error (ADE) of 1.7 meters and a final displacement error (FDE) of 3.7 meters over a 6-second prediction horizon. In closed-loop simulation, the model can simulate diverse driving interactions such as stopping at red traffic lights, yielding to other vehicles during right turns on red, and car following.
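The open-loop results above are reported with the standard displacement metrics. ADE is the mean Euclidean distance between predicted and ground-truth positions over every timestep of the horizon, and FDE is that distance at the final timestep only. The following is a minimal NumPy sketch, assuming trajectories are arrays of (x, y) positions in meters sampled at matching timesteps. The function name `ade_fde` is hypothetical and is not taken from this project's code.

```python
from typing import Tuple

import numpy as np


def ade_fde(pred: np.ndarray, gt: np.ndarray) -> Tuple[float, float]:
    """Compute ADE and FDE for trajectories of shape (T, 2) or (N, T, 2).

    Hypothetical helper for illustration: positions are assumed to be (x, y)
    in meters, with pred and gt sampled at the same timesteps.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    if pred.shape != gt.shape or pred.ndim < 2 or pred.shape[-1] != 2:
        raise ValueError("pred and gt must have identical shape (..., T, 2)")

    # Euclidean distance between predicted and ground-truth positions
    # at every timestep; resulting shape is (..., T).
    dist = np.linalg.norm(pred - gt, axis=-1)

    # ADE: mean distance over all timesteps (and over agents, if batched).
    ade = float(dist.mean())
    # FDE: distance at the last timestep, averaged over agents if batched.
    fde = float(dist[..., -1].mean())
    return ade, fde


if __name__ == "__main__":
    gt = np.zeros((3, 2))
    pred = np.array([[3.0, 4.0], [0.0, 0.0], [6.0, 8.0]])
    # Per-step errors are 5, 0, and 10 meters.
    print(ade_fde(pred, gt))  # (5.0, 10.0)
```

For a batch of agents, averaging the per-agent final errors gives the dataset-level FDE, and averaging over all agents and timesteps gives the dataset-level ADE. Other averaging conventions (e.g., per-scenario first) would give slightly different numbers.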